Computational Meta-Psychology

Table of Contents

- 1. AI is to Understand Ourself

- 2. Color Renormalization

- 3. Computational Monism

- 4. What algorithm should a robot use? - Solomonoff Induction

- 5. AI has made huge progress in encoding data

- 6. Inceptionism looks like Psychedelic

- 7. Representation by Simulation

- 8. Causal Model

- 9. Computation vs Mathematics

- 10. Mental Representation

- 11. But AI progressed the opposite way

- 12. Our Hardware

- 13. Brain boots itself in 80 mega seconds

- 14. Storage Capacity of Brain: 100GB to 2.5PB

Notes from Computational Meta-Psychology - 32c3 by Joscha Bach

1. AI is to Understand Ourself

We are systems that find meaning in patterns. The best way to understand ourselves is to build such systems.

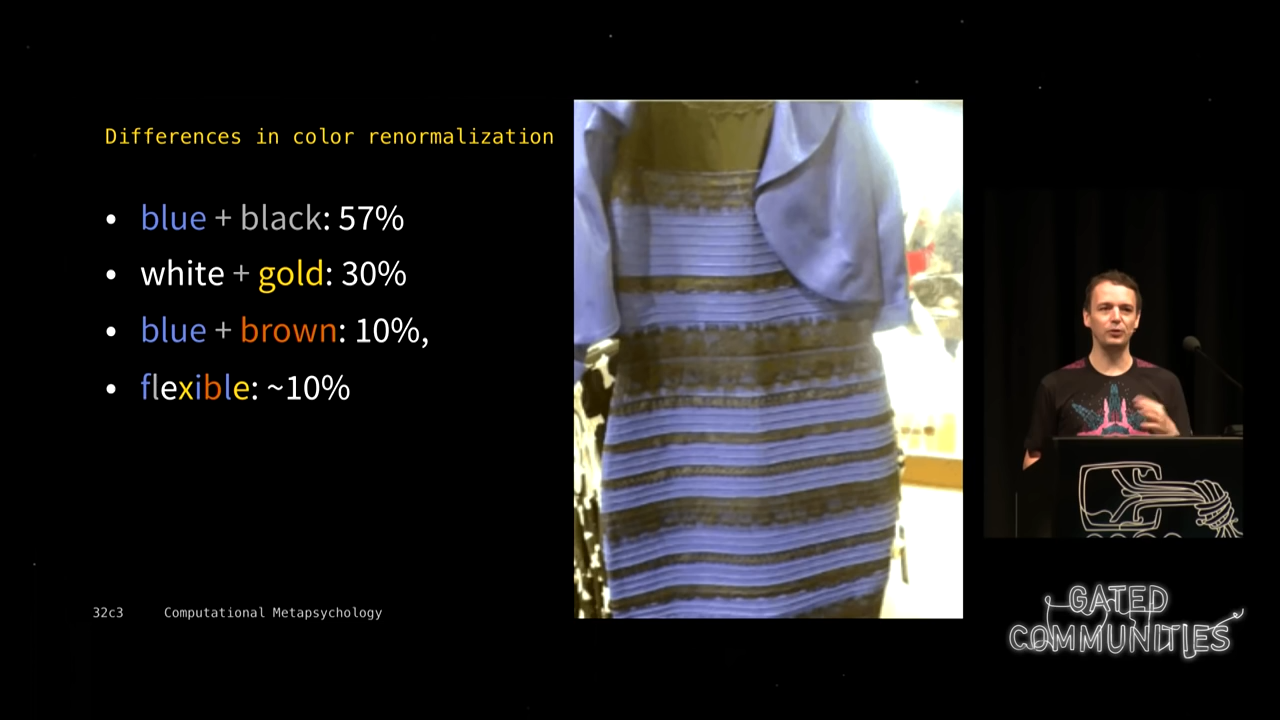

2. Color Renormalization

0:02:37 Minds cannot know objective truths (outside of mathematics) but they can generate meaning

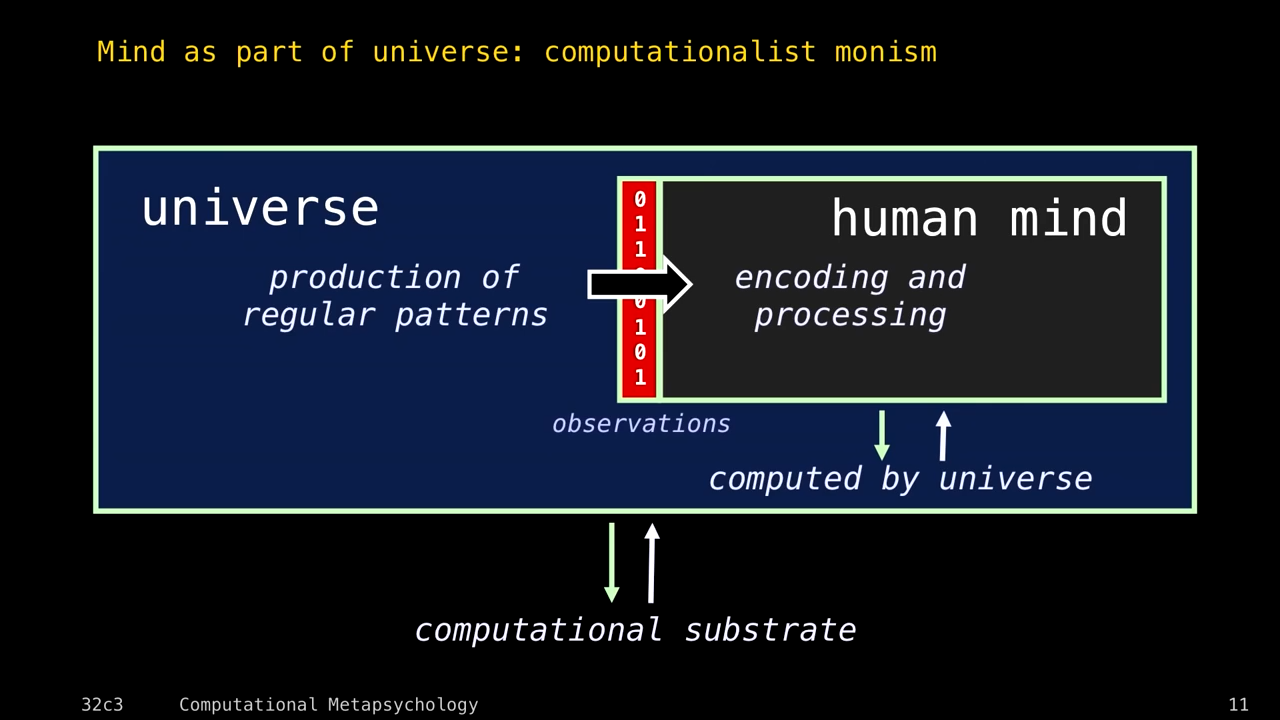

3. Computational Monism

We make sense of the data at our systemic interface.

4. What algorithm should a robot use? - Solomonoff Induction

Bayesian Reasoning + Induction + Occam's Razor = Solomonoff Induction

Solomonoff Induction is not computable, so we can only approximate it. So, we can't get to the truth.
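The combination of Bayesian updating and Occam's Razor can be illustrated with a toy approximation. This is only a sketch, not Solomonoff Induction itself: the real thing weighs all programs of a universal machine, whereas here the hypothesis class is assumed to be "bit patterns that repeat forever", and the names `hypotheses`, `predicts` and `next_bit_probability` are made up for illustration.

```python
from fractions import Fraction


def hypotheses(max_len):
    """Enumerate toy 'programs': bit patterns that repeat forever."""
    for length in range(1, max_len + 1):
        for n in range(2 ** length):
            yield format(n, f"0{length}b")


def predicts(pattern, observed):
    """True if repeating `pattern` reproduces the observed bit string."""
    return all(bit == pattern[i % len(pattern)] for i, bit in enumerate(observed))


def next_bit_probability(observed, max_len=8):
    """Posterior probability that the next bit is '1'."""
    weight_one = Fraction(0)
    weight_total = Fraction(0)
    for pattern in hypotheses(max_len):
        if not predicts(pattern, observed):
            continue  # Bayesian update with a 0/1 likelihood: refuted hypotheses drop out
        prior = Fraction(1, 2 ** len(pattern))  # Occam's Razor: shorter patterns weigh more
        weight_total += prior
        if pattern[len(observed) % len(pattern)] == "1":
            weight_one += prior
    return weight_one / weight_total


# After "010101" the short pattern "01" dominates, so a '1' is judged unlikely.
p_one = next_bit_probability("010101")
```

Longer patterns that happen to fit the data still contribute, but with exponentially smaller weight, which is why the prediction follows the simplest surviving explanation.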

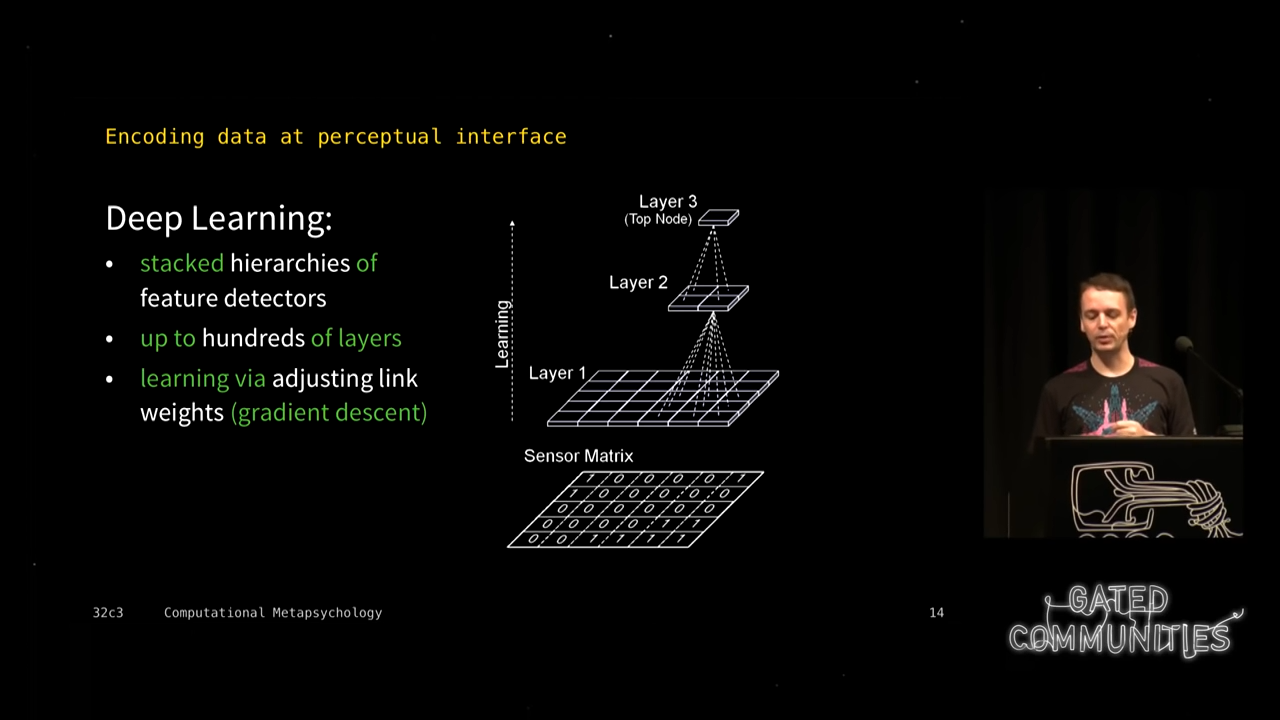

0:05:01 We get meaning by suitable encoding.

5. AI has made huge progress in encoding data

6. Inceptionism looks like Psychedelic

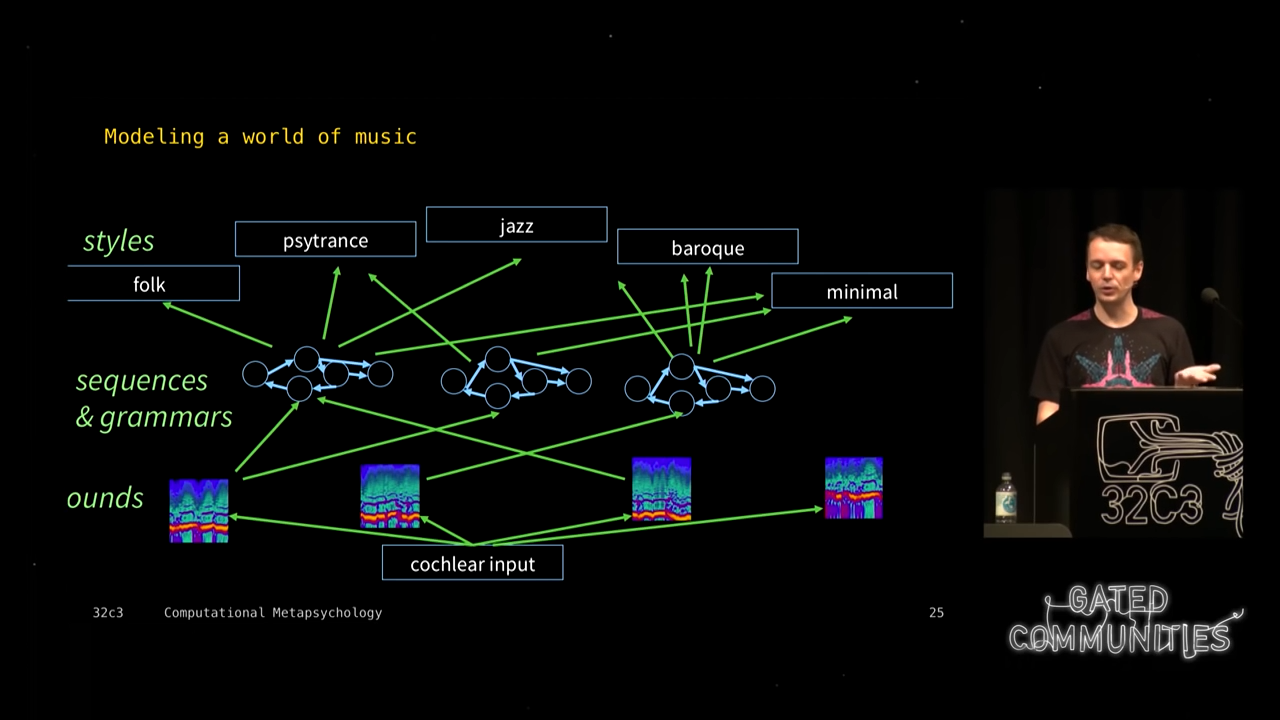

7. Representation by Simulation

Campbell and Foster, 2015

Sounds = Percepts; Sequences & Grammar = Simulation; Concept = Styles

8. Causal Model

8.1. Associative model - Weakly Determined model

8.2. Algorithm - Strongly determined model

0:09:44

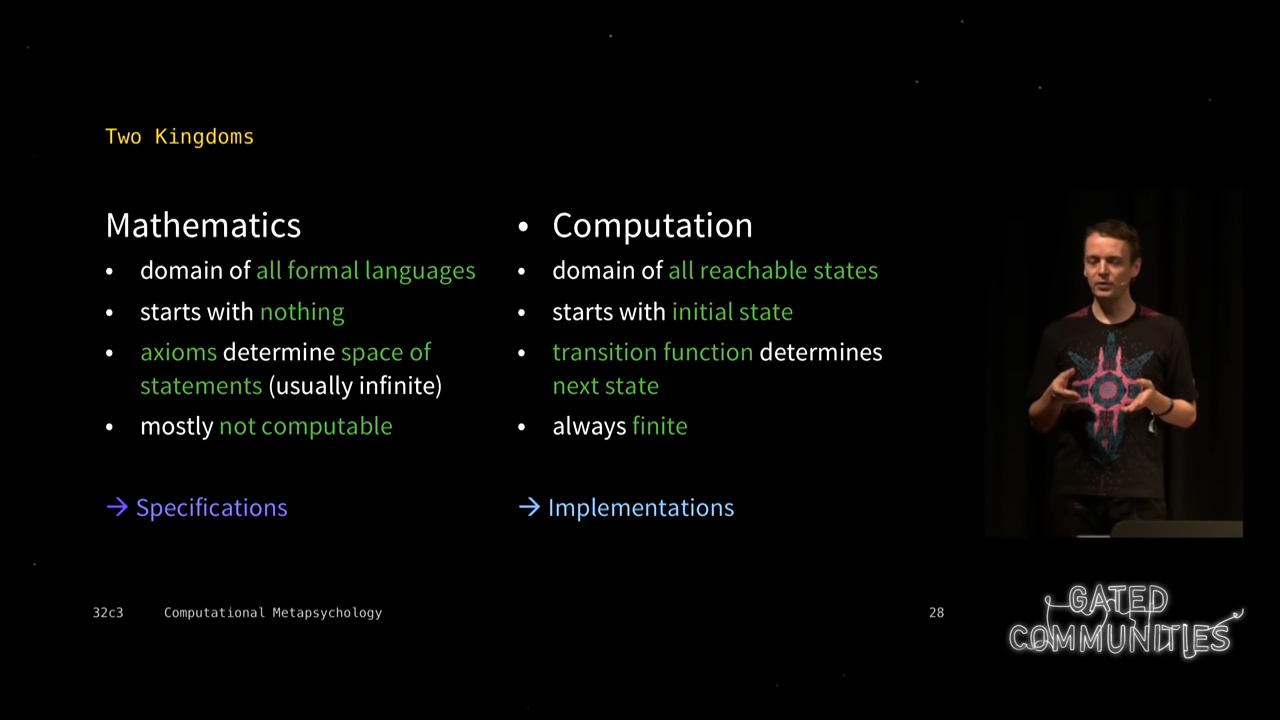

9. Computation vs Mathematics

Nothing is mathematics. Mathematics is a domain of formal language - it doesn't exist.

Computation can exist

- you start with a state

- then apply transition function

- it is finite
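The three points above can be sketched in a few lines. The helper `run` and the example transition function are hypothetical names chosen for illustration; the only claim is that a computation is a start state plus a repeatedly applied transition function, carried out in a finite number of steps.

```python
def run(state, transition, max_steps):
    """Apply `transition` repeatedly from `state`; return all visited states."""
    trace = [state]
    for _ in range(max_steps):
        state = transition(state)
        trace.append(state)
    return trace


def step(state):
    """Example transition function: a counter with four states (0..3)."""
    return (state + 1) % 4


trace = run(0, step, max_steps=6)
print(trace)  # [0, 1, 2, 3, 0, 1, 2]
```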

We can understand mathematics because our brain can compute some part of mathematics. Very little of it, and with little complexity, but enough that we can map some of the infinite complexity to computational patterns.

10. Mental Representation

10.1. Percepts

10.2. Mental Simulation

10.3. Conceptual Representation

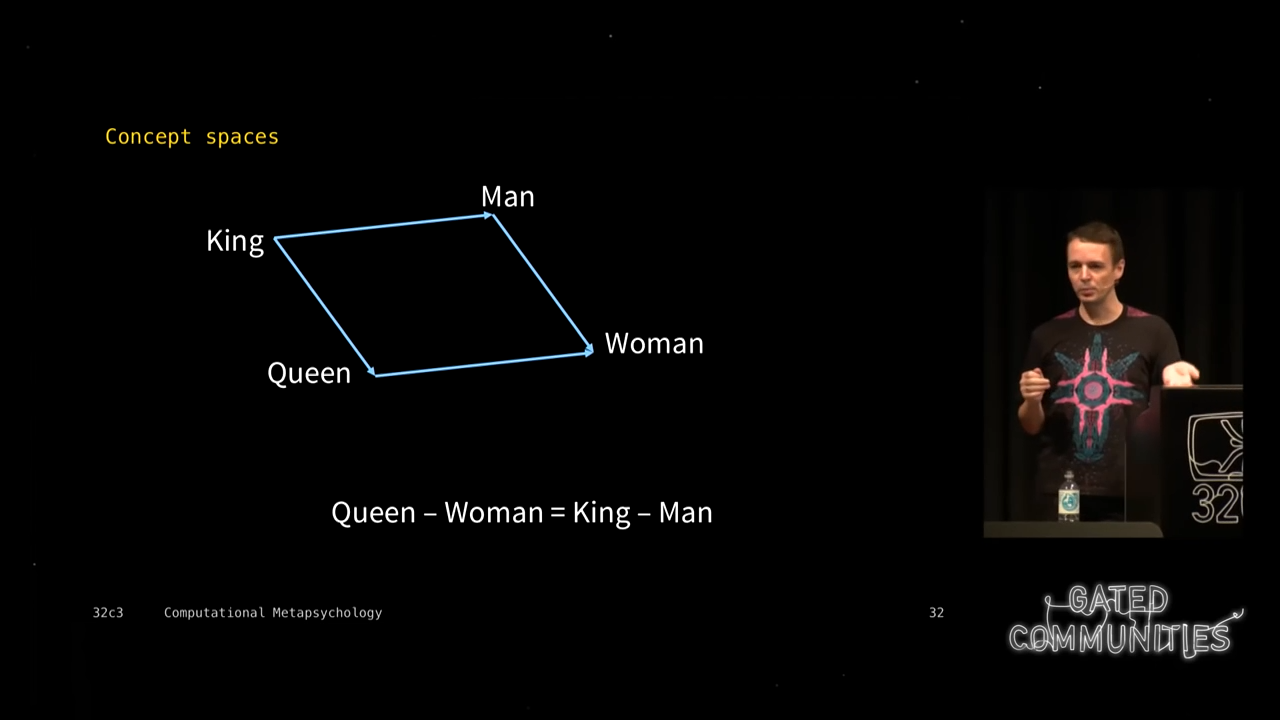

10.3.1. Concept Spaces

- Interface (often shared between individuals)

- compositional

- can be described using high-dimensional vector spaces

- we don't do simulation and prediction but can capture regularity

Because concept spaces are high-dimensional vector spaces, we can do interesting things like machine translation without understanding what the words mean, i.e. without any proper mental representations.

So, concept space is a mental representation that is incomplete.
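A minimal sketch of how translation can work purely on vectors, with no model of what the words refer to. Everything here is an assumption for illustration: the three-dimensional vectors are hand-picked toy values (real systems learn hundreds of dimensions from data), and `cosine` and `translate` are hypothetical helper names.

```python
import math

# Toy word vectors in a shared concept space (values are invented for illustration).
ENGLISH = {"dog": (0.9, 0.1, 0.0), "cat": (0.1, 0.9, 0.0), "house": (0.0, 0.1, 0.9)}
GERMAN = {"Hund": (0.8, 0.2, 0.1), "Katze": (0.2, 0.8, 0.1), "Haus": (0.1, 0.0, 0.9)}


def cosine(a, b):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def translate(word):
    """Pick the German word whose vector points in the most similar direction."""
    source = ENGLISH[word]
    return max(GERMAN, key=lambda candidate: cosine(source, GERMAN[candidate]))


print(translate("dog"))    # Hund
print(translate("house"))  # Haus
```

The lookup captures a regularity (nearby vectors mean related words) without simulating or predicting anything about dogs or houses, which is the sense in which the representation is incomplete.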

10.4. Linguistic Protocol

To transfer mental representation to others.

11. But AI progressed the opposite way

Progression of AI Models

- Linguistic protocols (formal grammars)

- Concept spaces

- percepts

- mental simulations

- Attention directed, motivationally connected systems

12. Our Hardware

For the brain: about 5% of the 2% is for the brain itself; another 10% of the 2% is for things related to the brain.

So, to code for the brain, we need ~500 kb of code.

The code of the universe doesn't define what this planet or you look like. Similarly, this code (the genome) only defines the rules for how the brain is built.

13. Brain boots itself in 80 mega seconds

- dimension learning

- a basic model of the world, so that we can operate in the world

After 80 megaseconds of waking time, i.e. by about 3.5 years of age, a child can make sense of the world.

- we are awake for about 1.5 gigaseconds if we reach old age

0:16:44 In this time we can form fewer than 5 million concepts.
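A quick arithmetic check of these figures. Two assumptions are not in the notes: that the 80 megaseconds count waking time only (as the 1.5 gigaseconds do), and that an adult is awake about 16 hours a day. The function names are made up for this sketch.

```python
DAYS_PER_YEAR = 365.25
SECONDS_PER_YEAR = DAYS_PER_YEAR * 24 * 3600


def implied_waking_hours_per_day(waking_seconds, age_years):
    """Hours awake per day needed to accumulate `waking_seconds` by `age_years`."""
    return waking_seconds / (age_years * DAYS_PER_YEAR) / 3600


def years_for_waking_seconds(waking_seconds, waking_hours_per_day):
    """Calendar years needed to accumulate `waking_seconds` of waking time."""
    return waking_seconds / (waking_hours_per_day * 3600) / DAYS_PER_YEAR


# Read as plain elapsed time, 80 Ms is only about 2.5 years.
elapsed_years = 80e6 / SECONDS_PER_YEAR

# Read as waking time up to age 3.5, it implies roughly 17.4 waking hours per day.
boot_hours = implied_waking_hours_per_day(80e6, 3.5)

# 1.5 Gs of waking time at an assumed 16 h/day takes about 71 years.
lifetime_years = years_for_waking_seconds(1.5e9, 16)

# Fewer than 5 million concepts in 1.5 Gs: at most one new concept per 300 s awake.
seconds_per_concept = 1.5e9 / 5e6
```

Under these assumptions the numbers hang together: the 3.5-year figure fits a waking-time reading better than an elapsed-time one, and the concept budget works out to about one new concept every five waking minutes.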

14. Storage Capacity of Brain: 100GB to 2.5PB

Maybe a single neuron doesn't matter too much. There is a lot of redundancy. So 100 GB may be the better estimate.

0:18:12